The usual four white walls, but in each corner a screen, surveilling the gallery. Normally the display is pale pink but at times it flicks to grey, indicating that someone somewhere is watching. It could be you, at home, clicking on the website for Octopus (2020), the installation project by Trevor Paglen that allowed the stay-at-homes, the voyeurs, the disabled, the bored, the ill, the curious – anyone online – to look in on Bloom, Paglen’s exhibition at the Pace Gallery in London, which ran from September until the latest lockdown forced it to close prematurely this month. The website gave you the choice – ‘allow pacegallery.com to use your camera?’ – to turn your laptop’s own eye on you. Say yes, and your face would be beamed onto one of the gallery’s screens, visible to anyone wandering the room. On the evidence, most people’s instincts screamed no. The monitors were almost always a blank grey: someone was watching but they didn’t want to show their face. I visited the show in person just once – stepping over the cables snaking around the space, each connected to a hi-def video camera trained on the works on display – but I kept returning to the website, and I let the computer do its thing: the green recording light above my screen switched on and I could see myself projected into the gallery as visitors paced below. I was the watcher watched, watching myself being watched – a delirious mise en abyme.

There’s nothing unfamiliar about remote video monitoring: it’s been around since 1942, when the engineer Walter Bruch invented CCTV in order to observe V-2 rocket launches live. An American company, Vericon, bought the technology and promoted it in the pages of Popular Science in 1949. But it took seventy years of development – tapes, multiplexing, digital recording, the internet, ANPR, facial recognition, behavioural analysis by AI – for the technology to explode: it’s estimated that next year there will be a billion fixed surveillance cameras worldwide, roughly one for every eight people on the planet.

An art gallery, anyway, is a monitored space: attendants in every room, CCTV covering every angle. Remote surveillance creeps into the imagination too: heists, laser alarms, How to Steal a Million. You always feel watched. But at the Pace Gallery you felt it more. You turned a corner and the video feed from one of the cameras following you came up on a screen on the wall, incorporating you into a work called ImageNet Roulette. As you approached, a green square appeared around your image on the display: you had been selected and bounded, as in the targeting systems of drone operations. Your image was identified by a machine learning algorithm and given a label. The camera saw me and gave me a name: ‘old man’. I took a step and was labelled again: ‘young buck, young man’. With each movement the algorithm flickered between categories: I was ‘sleeper, slumberer’ – right – then, for an epiphanic flash, ‘Pharaoh, Pharaoh of Egypt’, before I was ‘old man’ again.

There is, of course, no justice in the algorithm. In the distance behind me a (black, male) gallery attendant walked past and was identified as ‘charwoman, char, cleaning woman, cleaning lady, woman’. And ‘sweeper’. The algorithm at work here – developed under the direction of Paglen and Kate Crawford, who researches the social implications of AI – is trained on a dataset called ImageNet, a database of labelled images launched in 2009 by computer scientists at Stanford and Princeton (and now ended), which was designed to teach machines to recognise objects of any kind and to fit anything they saw into one of more than twenty thousand categories, from ‘abacus’ to ‘zebra’. It turned out that the system had particular and controversial difficulty with the subcategory ‘person’, which itself was divided into nearly three thousand sub-subcategories, among them ‘homosexual, homophile, homo, gay’ – under which you would find the further labels ‘gay man, shirtlifter’, then ‘faggot, fag, fairy, nance, pansy, queen, queer, poof’ or ‘closet queen’. Paglen’s installation is meant to demonstrate the biases inherent in machine intelligence systems, invariably programmed in by the people who make them. As he puts it online – correctly, alarmingly – ‘norms, classifications and categories always have a politics to them … What kinds of judgment are built into technical systems? Why are they made that way, who are they benefiting, and at whose expense?’

These are the right questions to ask, but the answers will be complicated. It’s worth pointing out, to Paglen for instance, that it’s in the interest of those who benefit – data aggregators, Silicon Valley behemoths, insurance companies, police agencies, the NSA, GCHQ – that the knowledge they derive from the massive amount of information they have gathered is as accurate as possible. Once you’ve recognised bias you can correct for it, and correcting themselves is how machine learning algorithms work: bad result, feed back in, tweak algorithm, get better result. It’s to the benefit of the owners of such algorithms that their mistakes – ‘Daniel is a pharaoh’ – are eliminated, and in 2020 they are: if I were one of China’s 1.4 billion citizens, any surveillance camera I passed would tell those interested exactly who I am. These days, category errors aren’t made.

What Paglen shows instead is a compressed – and important, and untold – history of the efforts that got us here. In the centre of the room at the Pace Gallery was The Standard Head (2020), a two-metre-high model in white lacquered foam derived from the work of Woody Bledsoe, a CIA-funded researcher at the University of Texas in the 1960s, who set out to measure and define a normative face to be used in early attempts at facial recognition: deviations from his norm could be used to identify people by their differences. To make his sculpture – an accurate 3D model of the head which Bledsoe would have built if he could – Paglen had to dig through Bledsoe’s archives. One of the things he learned was that the identikit ‘standard head’, which presumably became the property of the CIA, was generated by averaging the facial measurements of the subjects Bledsoe had to hand: volunteer students at the University of Texas – ‘basically all young white dudes’. The faultiness of the model, and its built-in racism, were really a feature of the times: the dataset available to feed the composite was limited by the researcher’s assumptions about what ‘average’ meant and by the ‘average’ subjects his institution provided.

But that doesn’t stop the sculpture being eerie: it represents the unreal idea of a real ordinary person. In the gallery you can easily pass it by: a smooth white form, absolutely uninteresting in surface, it could have been made by the sculptor Marc Quinn. But Paglen – born in Maryland in 1974, the son of a US air force doctor, raised on military bases around the world, resident of Brooklyn and Berlin – couldn’t be further from a former Young British Artist like Quinn, whose subjects have included the dysmelic and pregnant Alison Lapper and the supermodel Kate Moss. The Standard Head condenses the results of a significant exercise in research and analysis. Everything on display in the Pace exhibition had a double effect: unchallengingly but pleasingly attractive on the surface, designed to sit comfortably within four white walls, yet encoding a message you had to work to discover. The pretty pictures of flowers that make up the series ‘Bloom’ were constructed by photographing segments of plants with a high-resolution camera and running the pictures through an image recognition program before applying an algorithm to colourise and multiply the blossoms into uncannily impossible abundance. What from a distance looks like a woven tapestry turns out to be Classifications of Gait, a grid of tiny photographs taken from a database used to train computers to identify people by the way they walk. These works are information sublimated: the learning they represent could be conveyed in words – thousands of them – but as images they’re wired direct to the brain.

Paglen has been gathering information throughout his career, with a doggedness and thoroughness that should put almost any investigative journalist to shame – including those whose field of interest, like his, takes in surveillance, secrecy and hidden military operations. In his book Blank Spots on the Map: The Dark Geography of the Pentagon’s Secret World (2009), Paglen revealed something of the way his mind works. His preoccupation with military affairs began early: thanks to his father’s postings, he thought there could be no greater future for him than to be a fighter pilot. But instead he started digging, and it’s extraordinary how much he has continued to find. The chapter ‘Unexplored Territory’ tells of the week he spent in a hotel room near the top of the Tropicana Island Tower in Las Vegas, equipped with a high-powered telescope, eyepieces, tripod, camera and a radio scanner, all in order to observe and record – at every time of day and night – the comings and goings at McCarran Airport below. From McCarran, unmarked Boeing 737s and King Airs ferry workers and air force personnel to secret military installations across the Nevada desert, including the three-million-acre tract of the Nellis Air Force Range, an area whose details are obscured on publicly available maps. By logging flight numbers and eavesdropping on radio chatter, Paglen was able to fill in some of what the Pentagon wanted to keep blank.

But it isn’t just the legwork Paglen puts in that has made it possible for him to pursue such projects as Sites Unseen, an exhibition at the Smithsonian in 2018 that included imagery of nodes in the vast network of America’s military-technological infrastructure: undersea cables, spy satellites, an overhead night-time shot of NSA headquarters at Fort Meade, Maryland, and of the National Reconnaissance Office in Chantilly, Virginia. There’s also something special about his understanding of what blank space signifies: long before it was easy to trawl the web for aerial photos of any part of the globe, Paglen spent his time as a student in the basement library of UC Berkeley’s geography department, methodically working through the image archive of the United States Geological Survey. This exercise led to the realisation that when someone has something to hide, the gap where that something should be – on a map, in a redacted document – reveals the very shape of the secret. The act of erasure is also a form of disclosure. ‘Blank spots on maps outline the things they seek to conceal. To truly keep something secret, then, those outlines also have to be made secret. And then those outlines, and so on.’ Ad infinitum.

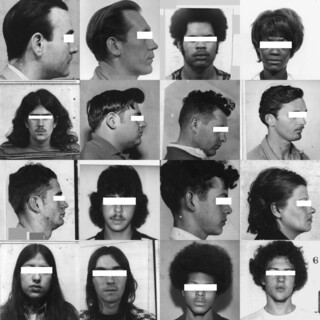

The doors of the Pace Gallery are shut now, but at the Carnegie Museum of Art in Pittsburgh you can still catch Paglen at work, with an exhibition – Opposing Geometries (until 14 March 2021) – that continues his latest investigations into what machines see and what they don’t, including a piece called They Took the Faces from the Accused and the Dead . . . (SD18), in which he has suggestively blacked out identifying details from three thousand mugshots taken from an early facial recognition database. The show goes on.

Send Letters To:

The Editor

London Review of Books,

28 Little Russell Street

London, WC1A 2HN

letters@lrb.co.uk

Please include name, address, and a telephone number.