Fool the Discriminator

Liam Shaw

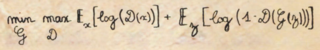

Last week Christie’s sold at auction a portrait ‘created by an artificial intelligence’ for $432,500. The canvas from the art collective Obvious was described as a portrait of the fictional ‘Edmond Belamy’, and signed with an equation:

$$\min_G \max_D \; \mathbb{E}_x[\log(D(x))] + \mathbb{E}_z[\log(1 - D(G(z)))]$$

It expresses the concept underlying the class of machine-learning algorithms known as Generative Adversarial Networks (GANs), which were used to produce the portrait. GANs were first outlined by researchers led by Ian Goodfellow in 2014. The name ‘Belamy’ is an affectionate homage, ‘bel ami’ being a loose translation of ‘good fellow’.

Hugo Caselles-Dupré, one of the members of Obvious and a computer science PhD student, explained the idea behind GANs as a competition between two algorithms, a Generator and a Discriminator:

We fed the system with a data set of 15,000 portraits painted between the 14th century to the 20th. The Generator makes a new image based on the set, then the Discriminator tries to spot the difference between a human-made image and one created by the Generator. The aim is to fool the Discriminator into thinking that the new images are real-life portraits.

In principle, the Generator eventually produces images close enough to the real portraits that the Discriminator has only a 50:50 chance of telling them apart. Described as an optimisation algorithm for fitting a data distribution, this isn’t very compelling, but as an analogy for the relationship between artist and critic it’s hard to resist, which is surely part of the reason for Obvious’s success.
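The 50:50 end point can be checked on a toy example. What follows is a minimal sketch in Python, assuming a discrete "data distribution" over three invented outcomes (the numbers are hypothetical and have nothing to do with Obvious’s 15,000 portraits). It relies on a result from Goodfellow and colleagues’ 2014 paper: for a fixed Generator whose outputs follow a distribution p_g, the best possible Discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)). The function names are illustrative only.

```python
import math


def optimal_discriminator(p_data, p_g):
    """Best Discriminator for a fixed Generator: D*(x) = p_data(x) / (p_data(x) + p_g(x))."""
    # Outcomes that neither distribution can produce don't matter; 0.5 is an arbitrary filler.
    return [pd / (pd + pg) if pd + pg > 0 else 0.5 for pd, pg in zip(p_data, p_g)]


def value(p_data, p_g, d):
    """V(D, G) = E_{x~p_data}[log D(x)] + E_{x~p_g}[log(1 - D(x))].

    The second term is the signature's E_z[log(1 - D(G(z)))], rewritten as an
    expectation over p_g, the distribution of the Generator's outputs G(z).
    """
    v = 0.0
    for pd, pg, dx in zip(p_data, p_g, d):
        if pd > 0:
            v += pd * math.log(dx)        # reward for calling real samples real
        if pg > 0:
            v += pg * math.log(1.0 - dx)  # reward for calling generated samples fake
    return v


def jensen_shannon(p, q):
    """Jensen-Shannon divergence (natural log) between two discrete distributions."""
    m = [(a + b) / 2 for a, b in zip(p, q)]

    def kl(a, b):
        return sum(ai * math.log(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


# Hypothetical toy data: probabilities of three invented outcomes.
p_data = [0.5, 0.3, 0.2]
for p_g in ([0.5, 0.3, 0.2], [0.2, 0.3, 0.5]):
    d = optimal_discriminator(p_data, p_g)
    v = value(p_data, p_g, d)
    print(d, v, -math.log(4) + 2 * jensen_shannon(p_data, p_g))
```

When the Generator matches the data exactly, the best Discriminator outputs 0.5 for every outcome (a coin toss) and V sits at its minimum, -log 4 (about -1.386). Any mismatch lets the Discriminator beat the coin toss and raises V by twice the Jensen-Shannon divergence. Real GANs only approximate this ideal: both networks are trained by gradient steps on samples, not solved in closed form.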

The computer hardware required to run these networks is expensive. Graphics Processing Units (GPUs) are well suited to the intensive calculations involved, but they don’t come cheap. Portrait of Edmond Belamy is low-resolution because that’s about as far as a bunch of amateurs can stretch. As Caselles-Dupré complained in an interview:

With the big GAN papers, it's like, ‘OK, it requires like 512 GPU cores,’ something that we don't have, we don't have the budget for this … So yeah, we want to do this innovative stuff, but we've got to start somewhere to get some financing and continue working, having some credibility, having opportunities to get to access to more computational power.

Whatever Obvious’s aims, Christie’s proclaimed the sale as the birth of ‘a new medium’ that raised questions about authorship: human or algorithm? But it soon became apparent that Obvious had produced the portrait using code written by other AI artists, and other, more prosaic questions of authorship arose.

Robbie Barrat has written and made available several repositories of code to produce art from GANs, including most of the code used by Obvious. ‘Am I crazy for thinking they really just used my network and are selling the results?’ he asked. Others saw the sale as a betrayal of the open-source ethos of the AI art community; Caselles-Dupré had repeatedly asked Barrat for assistance and modifications to the code in late 2017 without saying that they planned to sell the results.

‘I wonder why they missed the opportunity to declare their work as an AI-readymade and bring us the first digital Duchamp,’ Mario Klingemann commented. Unlike Duchamp, however, it seems that Obvious weren’t setting out to be provocative. Unprepared for their success, they have since been hastily trying to clarify that they greatly admire those whose code they used.

The Obvious debacle is a curious reflection of the Fountain episode. Duchamp submitted his readymade urinal, signed ‘R. Mutt’, to the Society of Independent Artists to test the rule that all who paid their fee could exhibit. Rather than rejecting it outright, the Society quietly removed it from the show. Christie’s reaction to the Portrait of Edmond Belamy couldn’t have been more different. The auction house exhibited and sold a low-resolution inkjet print produced from open-source code freely available online, seizing the opportunity to proclaim it a historic first and open a lucrative new market.

Having playfully transferred authorship to the algorithm to attract attention, Obvious are now experiencing the loss of authorship that comes once a work of art is public. ‘We totally understand that the whole community around AI and art took this as a really bad thing,’ Caselles-Dupré has said, ‘and now we agree with them, but we can't really convey this message because we are being a bit misrepresented.’ Duchamp wouldn’t have been surprised. ‘Obviously any work of art or literature, in the public domain,’ he wrote in 1956, ‘is automatically the subject or the victim of such transformations.’

Comments

JWA (2 November 2018 at 5:53pm) says:

But was it bought by another alogrithm?

JWA (2 November 2018 at 5:53pm) says:

@JWA *algorithm

David Sharp (2 November 2018 at 9:07pm) says:

Artificial intelligence doesn't exist. It's just another media bubble, like the nonsense about the so-called "millennium bug" in the run-up to the year 2000, or the more recent silliness over Google's "glass" spectacles, which when the big day came, almost nobody wanted.

If there were really artificial intelligences out there, they would be capable of reproducing themselves, and outwitting humans, rather than sitting passively in dumb machines waiting for one of us to key in the instructions they need. And if they really existed, we'd all be terrified. Our first instinct would be to switch them off, immediately. And that would be the right thing to do.

Ally (3 November 2018 at 11:32am) says:

@David Sharp

>It’s just another media bubble, like the nonsense about the so-called “millennium bug” in the run-up to the year 2000

Never did I rewrite so much code (or make so much in overtime) as in 1998-9, to amend 25-year-old scheduling programs whose original date-handling deviously minimised storage digits and processing. Along the way came horrible mishaps and ugly fixes. But the main lesson from dry run exercises was how to identify, contain and recover from problems without attracting attention, and that continued into the following year. Nobody wanted to be caught in the headlights of the “media bubble” but that doesn’t mean that the whole exercise was a hysteria.

thebears (4 November 2018 at 9:59pm) says:

@Ally

I don't disagree that current "AI" as described here is not AI (and wholly agree we should turn them off if they were!).

But this isn't *just* media hype - there's a real transformation happening, with little understanding or regulation from the public or governments. Adaptive algorithms, combined with massive datasets, do let us do stuff we couldn't do before (for both good and ill), and we are giving them huge power to make significant (and opaque) decisions about people's lives. The connection between some of these algorithms and those big datasets also gives monopoly power to companies like Apple, Google, and Facebook, in a way that so far has defied regulators...this problem could be more acute in other near future sectors such as healthcare. Adaptive algorithms can also do stuff that only humans could do previously, which is also likely to be profoundly disruptive - we don't need real AI to have self-driving cars (another technology that isn't quite as close as companies selling the concept would like, but may not be so far away either).

Graucho (5 November 2018 at 4:07am) says:

@thebears

Computers always were and always will be high speed morons. The remarkable thing that has happened over my lifetime is just how high speed they have become.

David Sharp (8 November 2018 at 8:23pm) says:

@thebears

I agree with you on the terrifying power of this technology, which is already being very widely used, and is brilliantly analysed in Virginia Eubanks's book "Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor" (https://us.macmillan.com/books/9781250074317)

The fact that this punitive, socially-controlling technology is being lauded as "artificial intelligence" adds insult to injury – and the injury is already great.

(Unless the LRB search engine is playing tricks on me, Eubanks's work has yet to be mentioned therein)

Ally (3 November 2018 at 9:06am) says:

Readymades such as Fountain appear to have been generated through a multi-agent process too, with that instance attributed to Duchamp produced by either Elsa von Freytag-Loringhoven or by Louise Norton (later Varèse). OpenCulture recently had an interesting article and discussion: [http://www.openculture.com/2018/07/the-iconic-urinal-work-of-art-fountain-wasnt-created-by-marcel-duchamp.html].

Liam Shaw (3 November 2018 at 11:34am) says:

@Ally

That's a great article, thanks for sharing and correcting my omission. I'd love to know more about the messy history of the Dada scene. The discussion of authorship on the Tate's page on Fountain is also well worth reading: "Unfortunately, the records of the Society of Independent Artists are of no help here, as most were lost in a fire."

It does seem very appropriate that the most influential artwork of the twentieth century (as voted by 500 top British Discriminators in 2004) has no uniquely identifiable Generator.

Timothy Rogers (4 November 2018 at 4:45am) says:

@Liam Shaw

A good book about the messy Dada scene and all of its messy personalities is William Rubin's "Dada and Surrealism and Their Heritage", available in several editions. I had it as assigned reading for a college art history course I took back in the 1970s. Duchamp was more cerebral, philosophical, and even-tempered than most of his peers in the visual arts, so his comments on his own works avoid self-promotion, a rarity in that competitive world.

Timothy Rogers (4 November 2018 at 11:34pm) says:

After re-reading the blog and comments, I think that the "readymade" classification (or comparison) is a little bit off. The printed image is not a found object relocated to a frame or pedestal and neither is it a collage constructed of such objects. Rather it is a mechanically determined "statistical average" of thousands of paintings (which presumably had to be mapped by as many as a million pixels of content per painting). This averaging probably accounts for both its blurriness and the fact that it can't be assigned to one gender or another. A typical element of portrait imagery over hundreds of years is the white shirt or blouse spilling over the black gown or coat of the portrayed (seen in portraits by Hals, Ingres and the works of hundreds of painters in between them). It's interesting that a painter like Gerhard Richter might have produced a blurry painting like this and called it "generic portrait", and the possibility of future painters doing something like this (imitating machine-made paintings) raises the idea of an infinite regression (or dialectic) between humans and the machines they make - critics would lap this up, but it might not produce anything of real interest after the first time it was done. As to the auction price, we have no idea if it actually represents damage to somebody's bank account or is merely a prearranged bid made to publicize the whole show.

Seth Edenbaum (8 November 2018 at 7:20pm) says:

The "debacle" concerns money. For the rest the art is "conceptual", and unless the piece is dated as a first example of X the market will go nowhere.

https://www.artsy.net/article/artsy-editorial-salvador-dali-accidentally-sabotaged-market-prints