In John Lanchester’s novel Mr Phillips, the hero, a newly redundant accountant, is taken hostage during a bank robbery. Lying face down on the ground, he passes the time rehearsing a conversation he’d had with his former colleagues about the statistics of the National Lottery. The chance of winning is about one in fourteen million, which is much lower than the risk of dying before the week’s lottery is drawn. The accountants wondered how close to the draw you would need to buy a ticket for the chance of winning to be greater than the risk of dying. The answer is about three and a half minutes.

The calculation is straightforward. Of the fifty million people in England, about half a million die each year, which is about ten thousand a week. The probability of dying during a typical week is therefore about one in five thousand: three thousand times higher than the one-in-fourteen-million chance of winning that week’s lottery. The probability of dying and the probability of winning are therefore the same when the ticket is bought one three-thousandth of a week before the draw, which is about three and a half minutes. A more sophisticated calculation takes age into account, in which case a 75-year-old needs to buy a ticket 24 seconds before the lottery is drawn, but a 16-year-old could risk buying one an hour and ten minutes before.
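The arithmetic is easy to check. The sketch below uses only the round figures quoted above. The constant and function names are illustrative. It deliberately ignores age, which would need age-specific death rates that are not given here. Working with the unrounded ratio (fourteen million divided by five thousand is 2,800, not quite three thousand) gives 3.6 minutes, which matches the "about three and a half minutes" of the text.

```python
# Round figures quoted in the text (illustrative constants)
POPULATION = 50_000_000         # people in England
DEATHS_PER_WEEK = 10_000        # roughly half a million deaths a year
LOTTERY_ODDS = 14_000_000       # one chance in fourteen million
MINUTES_PER_WEEK = 7 * 24 * 60  # 10,080 minutes

def break_even_minutes(population, deaths_per_week, lottery_odds):
    """Minutes before the draw at which the risk of dying equals the chance of winning.

    Treats the weekly risk of dying as spread evenly over the week, so the risk
    over a fraction f of a week is f times the weekly risk.
    """
    weekly_death_risk = deaths_per_week / population        # about 1 in 5,000
    win_probability = 1 / lottery_odds
    fraction_of_week = win_probability / weekly_death_risk  # about 1/2,800
    return fraction_of_week * MINUTES_PER_WEEK

print(f"{break_even_minutes(POPULATION, DEATHS_PER_WEEK, LOTTERY_ODDS):.1f} minutes")
# prints "3.6 minutes"
```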

It is an amusing calculation, but as an approach it makes many people uncomfortable, not least because their own death or that of a friend or relative seems not to be properly accounted for by a probability: an individual, after all, is either alive or dead. But as the undertaker and poet Thomas Lynch has said, although individually each body is either in motion or at rest, en masse the picture is very different: ‘Copulation, population, inspiration, expiration. It’s all arithmetic – addition, multiplication, subtraction and long division.’ So different, in fact, that comforting certainties start to emerge from the apparent chaos of individual births and deaths: ‘There is a balm in the known quantities, however finite. Any given year . . . 2.3 million Americans will die . . . 3.9 million babies will be born.’

The statistical approach to birth and death came to the fore with a study published in 1710 by the Scottish physician and statistician John Arbuthnot, who showed that in London for every single year from 1629 to 1710 more boys were born than girls: extraordinarily unlikely if boys and girls were equally likely to be born. That result has been replicated repeatedly in most countries and most years, making it a pretty safe bet for this year and next year as well – so safe a bet that I defy you to find a bookmaker who would accept it. Arbuthnot himself explained the imbalance by invoking Divine Providence. Because men are more likely to die (‘the external Accidents to which males are subject . . . make a great havock of them’), ‘the wise Creator brings forth more Males than Females.’
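Just how unlikely can be put in a single line. If each year were a fair coin toss between "more boys" and "more girls", the chance of boys coming out ahead in every one of n independent years is one half raised to the power n. This is a minimal sketch of Arbuthnot's argument. It assumes independent years and ignores exact ties, and the function name is illustrative. Arbuthnot's published table covers 82 years of christenings.

```python
from fractions import Fraction

def prob_boys_every_year(n_years, p_boys_majority=Fraction(1, 2)):
    """Chance that boys outnumber girls in every one of n_years independent years.

    Uses Arbuthnot's null hypothesis: each year is a 50:50 event.
    Exact ties are ignored.
    """
    return p_boys_majority ** n_years

p = prob_boys_every_year(82)  # Arbuthnot's series ran for 82 years
print(f"{float(p):.2e}")      # about 2 in 10**25
```

Exact fractions keep the tiny number exact. A result of roughly 2 in 10^25 is why Arbuthnot felt entitled to reject the hypothesis that the sexes are equally likely.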

In the 19th century, Adolphe Quetelet, a Belgian, applied the method of probabilities to a whole range of social phenomena. Just as Laplace had successfully built a celestial mechanics from Newton’s laws of motion, so Quetelet tried to develop a ‘social physics’ based on the laws of large numbers, finding immutable patterns in social phenomena, and deriving large-scale regularity from local chaos. Divine Providence was soon forgotten, as it transpired that not only were births and deaths remarkably constant for each society, but so also were the numbers of murders, thefts and suicides and even, as Laplace showed, the number of dead letters in the Parisian postal system.

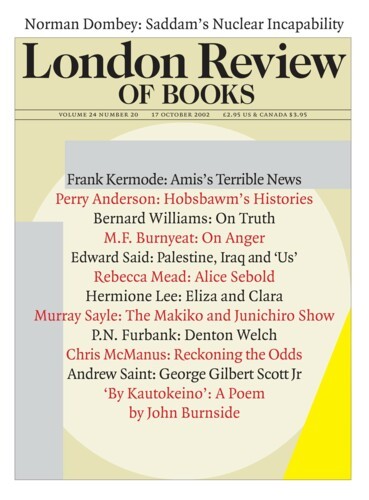

People do not find probabilities threatening only because they have epistemological doubts about the application of broad-brush, quasi-physical laws to individual mortality: many people dislike reasoning with numbers, frequently make errors in their use, and feel anxious when forced to use them. Reckoning with Risk is about that fear of numbers, about ways in which numbers can be more easily interpreted, and about the ways those who are ignorant of numbers can be manipulated to their disadvantage by those who do understand them. Consider two stories told by Gerd Gigerenzer.

The sales of a company fall by 50 per cent between January and May. Between May and September sales increase by 60 per cent. Is the company in better shape in September than it was in January? Down by 50 per cent and up by 60 per cent sounds to many people like an overall 10 per cent gain. But it’s not. Percentages are not symmetrical: each change is measured against a different starting point, so a fall and a rise do not simply cancel. Relative to January, the September sales figures are 20 per cent lower (£1 million down to £500,000 in May, up to £800,000 in September).

If the fallacy isn’t clear, here’s a more concrete example. In the 1970s, the Mexican Government wanted to increase the capacity of an overcrowded four-lane highway. They repainted the lanes, so that the road now had six. Not surprisingly, the repainted road with its narrower lanes was far more dangerous, and after a year of increased fatalities the road was repainted once again as a four-lane highway. The Government then claimed that since capacity had originally been increased by 50 per cent (four to six lanes) and then reduced by 33 per cent (six lanes to four lanes), the capacity of the road had, overall, been increased by 17 per cent (50 minus 33).
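Both fallacies come from adding percentages that should be multiplied. Each change scales whatever value the previous change left behind. The sketch below compounds the changes exactly. The function name is illustrative. It uses the exact one-third cut for six lanes back to four rather than the rounded 33 per cent.

```python
from fractions import Fraction

def net_percent_change(percent_changes):
    """Overall percentage change from a sequence of successive percentage changes.

    Each change is applied to the value left by the previous one, so the
    factors (1 + p/100) multiply; they do not simply add.
    """
    factor = Fraction(1)
    for pct in percent_changes:
        factor *= 1 + Fraction(pct) / 100
    return (factor - 1) * 100

# Sales: down 50 per cent (January to May), then up 60 per cent (May to September)
print(net_percent_change([-50, 60]))                # -20
# Road: up 50 per cent (four lanes to six), then down a third (six lanes to four)
print(net_percent_change([50, Fraction(-100, 3)]))  # 0
# The naive additive reading of the same figures
print(-50 + 60, 50 - 33)                            # 10 17
```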

Consider another, slightly more complicated scenario. A friend or a relative is told she has a positive mammogram. Does this mean that the person undoubtedly has breast cancer? Does it mean there is a high likelihood that she has breast cancer? Does it mean there is even a moderate probability she has breast cancer? To help you answer these questions you receive the following information, which is typical of that provided to people being screened. And in case you feel tempted to dispute the facts, these figures are typical of those from a number of carefully conducted studies: ‘The probability that a woman of 40 has breast cancer is about 1 per cent. If she has breast cancer, the probability that she tests positive on a screening mammogram is 90 per cent. If she does not have breast cancer the probability that she nevertheless tests positive is 9 per cent.’ What then is the probability that a 40-year-old woman with a positive mammogram actually has breast cancer?

It’s not easy. I’m a doctor, I teach multivariate statistics, I set questions such as this for postgraduate exams; but even though I can work it out, I still have no intuitive sense of what the correct answer is. I’m not the only one. Gigerenzer gave questions such as this to experienced clinicians who deal with these matters all the time and they had no idea either. He could see the sweat on their brows as they tried to beat these few simple numbers into shape and knew that they were failing. Eventually, most of the doctors told him that there was about a 90 per cent probability that a woman with a positive mammogram had breast cancer.

That answer is very wrong. The correct answer is actually about 10 per cent. In other words, about nine out of ten women who are told their mammogram is positive will eventually be found not to have breast cancer. Notice something I have done there. As Gigerenzer recommends, I have translated a statement in terms of probabilities or percentages into what he calls ‘natural frequencies’. Let’s try the problem again, but this time using natural frequencies. Nothing is different about the mathematics; the only difference is in the presentation. ‘Think of 100 women. One has breast cancer, and she will probably test positive. Of the 99 who do not have breast cancer, nine will also test positive. Thus a total of ten women will test positive.’ Now, of the women who test positive, how many have breast cancer? Easy: ten test positive of whom one has breast cancer – that is, 10 per cent. Why on earth was the original question so difficult?
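The same arithmetic can be done both ways in code. The first function follows the probability route, which is Bayes' theorem. The second follows Gigerenzer's natural-frequency route, counting women out of a concrete group of 100. Both use only the three figures quoted above, and the function names are illustrative. Exact fractions show that the "about 10 per cent" is, more precisely, 10 in 109, or just over 9 per cent.

```python
from fractions import Fraction

# Figures quoted in the text for a 40-year-old woman
PREVALENCE = Fraction(1, 100)           # P(cancer)
SENSITIVITY = Fraction(90, 100)         # P(positive | cancer)
FALSE_POSITIVE_RATE = Fraction(9, 100)  # P(positive | no cancer)

def ppv_bayes(prevalence, sensitivity, false_positive_rate):
    """P(cancer | positive) via Bayes' theorem, working in probabilities."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * false_positive_rate
    return true_pos / (true_pos + false_pos)

def natural_frequencies(n_women, prevalence, sensitivity, false_positive_rate):
    """The same question as expected counts out of a group of n_women."""
    with_cancer = n_women * prevalence                      # 1 of 100
    without_cancer = n_women - with_cancer                  # 99 of 100
    true_positives = with_cancer * sensitivity              # 0.9, "probably" the one
    false_positives = without_cancer * false_positive_rate  # 8.91, about nine
    return true_positives, false_positives

exact = ppv_bayes(PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE)
print(f"Bayes: {float(exact):.3f}")  # 0.092

tp, fp = natural_frequencies(100, PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE)
print(f"Of 100 women: {float(tp):.2f} true and {float(fp):.2f} false positives")
```

Rounding the counts to whole women (one true positive, nine false) gives the ten positives of the text. The ratio tp / (tp + fp) is exactly the Bayes result.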

Much of the problem here is in dealing with probabilities. Think of a comparable problem, couched more in logical terms. ‘Most murderers are men’ is hardly controversial. Does that mean then that ‘most men are murderers’? Hardly. The logical structure of the breast screening problem is identical. ‘Most women with breast cancer have a positive mammogram’ is not disputed. But it doesn’t mean that ‘Most women with a positive mammogram have breast cancer.’

The problem resides in the base rate. There are very many more men than there are men who are murderers, which means the word ‘most’ has to be treated with great care. Similarly there are very many more women than there are women with breast cancer. Any screening test has a ‘false positive rate’ – cases that look positive but are not. For rare conditions there are very many more false positives than true positives. And for breast cancer, nine out of ten positives will be false.
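The dependence on the base rate shows up clearly if the test is held fixed and only the prevalence changes. This sketch keeps the text's 90 per cent sensitivity and 9 per cent false-positive rate. The base rates other than 1 per cent are hypothetical values chosen for illustration, not figures from the book.

```python
def positive_predictive_value(prevalence, sensitivity=0.90, false_positive_rate=0.09):
    """Share of positive results that are true positives, for a given base rate."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * false_positive_rate
    return true_pos / (true_pos + false_pos)

# Same test, different (hypothetical) base rates: the rarer the condition,
# the more the false positives swamp the true ones
for prevalence in (0.5, 0.1, 0.01, 0.001):
    ppv = positive_predictive_value(prevalence)
    print(f"base rate {prevalence:>6.1%}: {ppv:6.1%} of positives are true")
```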

Beneath all such calculations is the concept of probability, a mathematical tool which is remarkable not least because, as Ian Hacking has shown, it was developed remarkably late in the history of Western thought. There is no hint of probabilistic reasoning in classical Greek thought, and even in the 16th century the Italian mathematician Girolamo Cardano could claim that when a die is rolled each face will occur exactly once in every six rolls. The claim is all the more bizarre because this is the classic gambler’s fallacy, and Cardano was an inveterate gambler. It was only in a series of letters about gambling exchanged between Pascal and Fermat in 1654 that the modern theory of probability was born.
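Cardano's claim is easy to test. If every face appeared exactly once in each run of six rolls, every such run would be a rearrangement of the faces 1 to 6. For a fair die with independent rolls, the chance of that is 6!/6^6, about 1.5 per cent. The sketch below computes this exactly and checks it by brute force over all 46,656 equally likely sequences.

```python
from fractions import Fraction
from itertools import product
from math import factorial

# Exact: the six rolls must be some ordering of the six faces
p_exact = Fraction(factorial(6), 6 ** 6)

# Brute-force check over every possible sequence of six rolls of a fair die
hits = sum(1 for rolls in product(range(1, 7), repeat=6) if len(set(rolls)) == 6)
p_enumerated = Fraction(hits, 6 ** 6)

print(p_exact, f"{float(p_exact):.4f}")  # 5/324 0.0154
```

So in roughly 98 runs of six rolls out of a hundred, at least one face repeats and another is missing.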

Nowadays we live in a world saturated with probabilities and statistics, with risks and uncertainties, and yet most people are very bad at processing the information. Gigerenzer proposes two theories to explain the difficulty we have with probabilities. One is the now standard gambit in evolutionary psychology of suggesting that if people find a task difficult it is because the human brain evolved in a world in which that skill was not required: a difficult theory to prove. Nevertheless, there is fairly good neuropsychological evidence that the human brain has what Brian Butterworth calls a ‘number module’, which is good only at certain types of calculation – essentially those involving whole numbers. Gigerenzer goes on to argue that as long as calculations are converted to whole numbers they feel natural and can be readily processed, since ‘minds are adapted to natural frequencies.’ That is the justification for the second way of presenting our earlier screening problem (‘one woman with cancer tests positive and nine women without cancer also test positive’).

Gigerenzer also suggests that much of the problem with conventional probability theory is in the representation of the problem. Take a simple multiplication such as 49 x 17 = 833. At school we were taught to solve this problem by breaking it down into its components, multiplying the 49 first by the ‘ones column’ (the 7) and then by the ‘tens column’ (the 1), and ensuring that numbers of ten or more are ‘carried’ properly. This method works because the numbers are represented in the Arabic system of decimal numbers, which readily allows them to be taken apart, their relatively simple components handled separately, and then put back together again at the end. It may be tedious, but it works, we can see that it works and we can, with practice, handle more complicated tasks such as 495643 x 178542. As Gigerenzer would emphasise, it is the algorithm implicit in the representation that does the calculation. As long as we trust in the representation, then it does everything for us. Use another representation and the calculation is near impossible. Translate the sum into Roman numerals, for example: is it true that XLIX x XVII = DCCCXXXIII? For us the only way to find out is to convert the numbers into decimals, do the calculation, then convert the numbers back again. (The Romans didn’t have that luxury.) One can see why the Arabic system of representing numbers revolutionised mathematics, particularly when the calculations required a zero.
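The point about representation can be made concrete. The schoolroom algorithm only ever multiplies single digits and carries tens into the next column, and that only works because each digit's place tells you its value. Roman numerals have no such columns, so the sketch follows the route described above: convert to decimal, calculate, convert back. It is a minimal sketch for non-negative integers. The function names are illustrative, and it assumes the modern subtractive convention for numerals such as IX and XL.

```python
def long_multiply(a: int, b: int) -> int:
    """Schoolbook multiplication of non-negative integers, one digit pair at a time."""
    a_digits = [int(d) for d in reversed(str(a))]  # ones column first
    b_digits = [int(d) for d in reversed(str(b))]
    result = [0] * (len(a_digits) + len(b_digits))
    for j, bd in enumerate(b_digits):              # each column of the multiplier
        carry = 0
        for i, ad in enumerate(a_digits):          # only single-digit products
            total = result[i + j] + ad * bd + carry
            result[i + j] = total % 10             # keep the units digit here
            carry = total // 10                    # carry the tens to the next column
        result[j + len(a_digits)] += carry
    return int("".join(map(str, reversed(result))))

ROMAN_VALUES = [
    (1000, "M"), (900, "CM"), (500, "D"), (400, "CD"),
    (100, "C"), (90, "XC"), (50, "L"), (40, "XL"),
    (10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I"),
]

def to_roman(n: int) -> str:
    """Decimal to Roman numerals, greedily taking the largest symbol that fits."""
    out = []
    for value, symbol in ROMAN_VALUES:
        count, n = divmod(n, value)
        out.append(symbol * count)
    return "".join(out)

def from_roman(s: str) -> int:
    """Roman numerals to decimal; a smaller symbol before a larger one is subtracted."""
    values = {"I": 1, "V": 5, "X": 10, "L": 50, "C": 100, "D": 500, "M": 1000}
    total = 0
    for i, ch in enumerate(s):
        v = values[ch]
        if i + 1 < len(s) and values[s[i + 1]] > v:
            total -= v
        else:
            total += v
    return total

def roman_multiply(x: str, y: str) -> str:
    # No Roman algorithm: convert to decimal, calculate, convert back
    return to_roman(long_multiply(from_roman(x), from_roman(y)))

print(long_multiply(49, 17))           # 833
print(roman_multiply("XLIX", "XVII"))  # DCCCXXXIII
```

All the work of `roman_multiply` is done by the decimal routine in the middle. That is the sense in which the representation, not the numbers, carries the algorithm.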

Gigerenzer’s method of natural frequencies is not the only approachable way of representing the breast cancer screening data. Ronald Fisher, the great statistician, described how he solved most problems by visualising them geometrically, after which it would simply be a matter of ‘reading off’ the solution. Having convinced himself that he had the right answer, he had to translate the calculation into the formal algebra which the journals and his colleagues required. Many scientists are similar: give them a graph or a diagram and a previously obscure problem becomes obvious. The breast cancer problem can also be represented geometrically. Each of the hundred circles on the diagram is a woman who has been screened. One of them, marked in black, has breast cancer. Ten of them, marked with a ‘+’, and including the one with breast cancer, test positive. It is easy to see that nine out of ten women who screen positive do not have breast cancer.
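A rough text version of the same kind of diagram can be generated in a few lines. In this sketch, '●' is the woman with breast cancer, who also tests positive. '+' marks a woman who tests positive without cancer, and '○' a woman who tests negative. Grouping the positives at the top left is an assumption made for readability, and the function name is illustrative.

```python
def icon_array(n=100, with_cancer=1, false_positives=9, per_row=10):
    """Text grid of n screened women.

    '●' has cancer (and tests positive), '+' tests positive without cancer,
    '○' tests negative and has no cancer.
    """
    icons = (["●"] * with_cancer
             + ["+"] * false_positives
             + ["○"] * (n - with_cancer - false_positives))
    rows = [" ".join(icons[i:i + per_row]) for i in range(0, n, per_row)]
    return "\n".join(rows)

grid = icon_array()
print(grid)
positives = grid.count("●") + grid.count("+")
print(f"{grid.count('●')} of {positives} positives has cancer")  # 1 of 10
```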

Gigerenzer, a cognitive scientist with an imaginative approach both to theory and to experimentation, has set out to write a popular, accessible book. His broad theme is that probabilities are often misunderstood, but that those misunderstandings could be avoided more often were professionals to represent risk and uncertainty more clearly. He illustrates this in relation to a range of topics: the problem of informed consent; counselling before an HIV test (one of his postgraduate students went for a test at twenty different German clinics and was misinformed in most of them); the O.J. Simpson case (where Gigerenzer clearly separates the question of how likely it is that someone who batters his wife will subsequently murder her – not very, it seems – from the question of how likely it is, given that a woman has been murdered, that she has been murdered by the person who previously battered her: pretty high by all accounts); and the problems of communicating the complexities of DNA fingerprinting to judges, lawyers and jurors, many of whom seem to be virtually innumerate. The book is at times a little discursive, but its quality is not in question; nor is there any doubt that the problems it raises are important ones that are likely to become more so in the future.

Send Letters To:

The Editor

London Review of Books,

28 Little Russell Street

London, WC1A 2HN

letters@lrb.co.uk

Please include name, address, and a telephone number.